The scope and tasks of this initial AFE were intended to address the following fundamental certification questions:

- What performance-based objectives should an applicant satisfy to demonstrate that a system incorporating machine learning meets its intended function in all foreseeable operating conditions, recognizing that those conditions may need to be bounded?

- What methods can determine whether a training data set is (a) correct and (b) complete?

- When is machine learning ‘retraining’ needed, and how is the extent of the retraining determined? For example, how much retraining is required if changes are made to the sensors, the neural net structure, or the neural net activation functions?

- Can simplex architecture monitors work for all machine learning applications, including very complex ones? Under what conditions can a simplex architecture not work?
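
To make the last question more concrete, the following is a minimal sketch of the simplex idea, not a design taken from the project report. It assumes a one-dimensional state, a stand-in function in place of a trained model, and a monitor that only checks whether the proposed command lies inside a fixed envelope. All names (`SimplexController`, `ml_controller`, `safe_controller`) and the bounds are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class SimplexController:
    """Hypothetical simplex wrapper; names and bounds are illustrative only."""
    ml_controller: Callable[[float], float]    # complex function, hard to verify
    safe_controller: Callable[[float], float]  # simple fallback, assumed verified
    lower: float                               # lower bound of the command envelope
    upper: float                               # upper bound of the command envelope

    def command(self, state: float) -> Tuple[float, str]:
        proposed = self.ml_controller(state)
        # Monitor: accept the ML output only if it lies inside the envelope.
        # A NaN output fails both comparisons and therefore triggers the fallback.
        if self.lower <= proposed <= self.upper:
            return proposed, "ml"
        return self.safe_controller(state), "fallback"


def ml_controller(state: float) -> float:
    # Stand-in for a trained model (assumption): a simple gain of 2.
    return 2.0 * state


def safe_controller(state: float) -> float:
    # Simple fallback (assumption): clamp the state to [-1, 1].
    return max(-1.0, min(1.0, state))


simplex = SimplexController(ml_controller, safe_controller, lower=-1.0, upper=1.0)
print(simplex.command(0.25))  # (0.5, 'ml'): ML output is inside the envelope
print(simplex.command(2.0))   # (1.0, 'fallback'): ML output 4.0 is rejected
```

The sketch also hints at why the question is open: the monitor is only as good as the envelope it checks, and for a complex application it may not be possible to state such an envelope, or a verified fallback, in a simple form.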

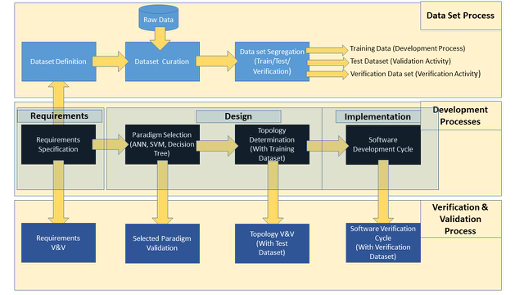

The project members investigated existing verification methods, resulting in an extensive bibliography. These references were analyzed to inform the definition of a Machine Learning Process Flow (see figure).

Additionally, methods for bounding the behavior of ML-based systems were investigated. The project findings were compiled in a final report that is available for download.